ANOTHER CORRECTION: First, I failed again on Saturday to provide a working link to the video of Rob and Pam, extremists posing as reasonable candidates for the school board for Central Valley School District. They are seen holding a meeting after a board meeting they helped shut down because they had refused to wear masks. (See last Friday’s post, A School Board Takeover in the Making.) For the working link to that featured video, click here.

MAIN POST: I have long been interested in how we know what we think we know (epistemology). I tried to write about epistemology and conspiracy theories a couple of years ago in a post, Trust and Knowledge, but my writing pales next to Doug Muder’s thoughtful analysis in last Monday’s (September 13) Weekly Sift, On Doing Your Own Research. I’ve copied and pasted his post below. Once again, I highly recommend signing up to receive the one to three emails he sends out nearly every Monday morning. (Sign up here in the left-hand column.) I look forward to his level-headed analysis every week.

Keep to the high ground,

Jerry

On Doing Your Own Research

by weeklysift

It's easy to laugh at the conspiracy theorists. But our expert classes aren't entitled to blind trust.

One common mantra among anti-vaxxers, Q-Anoners, ivermectin advocates, and conspiracy theorists of all stripes is that people need to "do their own research". Don't be a sheep who believes whatever the CDC or the New York Times or some other variety of "expert" tells you. If something is important, you need to look into it yourself.

Recently, I've been seeing a lot of pushback memes. This one takes a humorous poke at the inflated view many people have of their intellectual abilities.

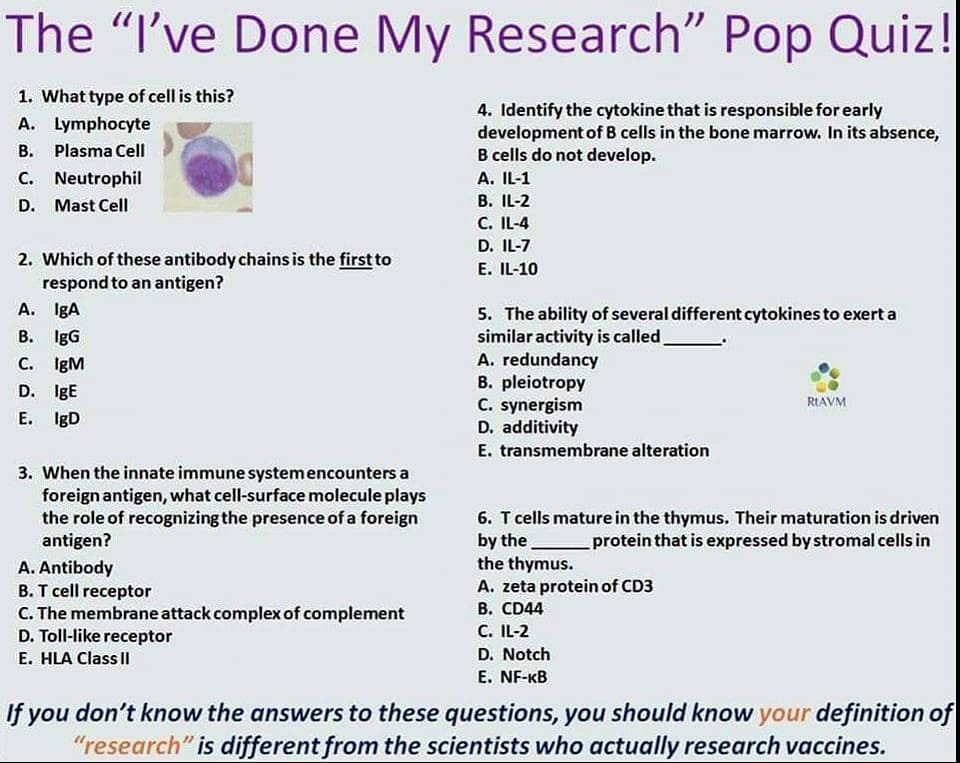

While this one is a bit more intimidating:

And this one is pretty in-your-face:

I understand and mostly agree with the point these memes are trying to make: There is such a thing as expertise, and watching a YouTube video is no substitute for a lifetime of study. In fact, few ideas are so absurd that you can't make a case for them that is good enough to sound convincing for half an hour -- as I remember from reading Erich von Daniken's "ancient astronaut" books back in the 1970s.

Medical issues are particularly tricky, because sometimes people just get well (or die) for no apparent reason. Whatever they happened to be doing at the time looks brilliant (or stupid), when in fact it might have had nothing to do with anything. That's why scientists invented statistics and double-blind studies and so forth -- so they wouldn't be fooled by a handful of fluky cases, or by their own desire to see some pattern that isn't really there.

All the same, I cringe when one of these memes appears on my social media feed, because I know how they'll be received by the people they target. The experts are telling them: "Shut up, you dummy, and believe what you're told."

They're going to take that message badly, and I actually don't blame them. Because there is a real crisis of expertise in the world today, and it didn't appear out of nowhere during the pandemic. It's been building for a long time.

Liberal skepticism. Because the Trump administration was so hostile to expertise, we now tend to think of viewing experts skeptically as a left/right issue. But it's not. Go back, for example, and look at liberal Chris Hayes' 2012 book Twilight of the Elites. Each chapter of that book covers a different area in which some trusted corps of experts failed the public that put its faith in them: Intelligence experts (and the journalists who covered them) assured us that Saddam had weapons of mass destruction. Bankers drove the world economy into a ditch in 2008, largely because paper that turned out to be worthless was rated AAA. The Catholic priesthood, supposedly a guardian of morality for millions of Americans, was raping children and then covering it up.

Experts, it turns out, do have training and experience. But they also have class interests. Sometimes they're looking out for themselves rather than for the rest of us.

More recently, we have discovered that military experts have been lying to us for years about the "progress" they'd made in promoting Afghan democracy and training an Afghan army to defend that democratic government.

It's not hard to find economists who present capitalism as the only viable option for a modern economy, or who explain why we can't afford to take care of all the sick people, or to prevent climate change from producing some apocalyptic future.

Such people are very good at talking down to the rest of us. But ordinary folks are less and less likely to take them seriously. And that's good, sort of. You shouldn't believe what people say just because they have a title or a degree.

If not expertise, what? So it's not true that if you argue with a recognized expert, you're automatically wrong. Unfortunately, though, recent events have shown us that a reflexive distrust of all experts creates even worse problems.

It's hard to estimate how many Americans have died of Covid because we haven't been willing to follow expert advice about vaccination, masking, quarantining, and so on. Constructing such an estimate would itself require expertise I don't have. But simply comparing our death rate to Canada's (713 deaths per million people versus our 2,034) indicates it's probably in the hundreds of thousands.

Our democracy is in trouble because large numbers of Americans are unwilling to accept election results, no matter how many times they get recounted by bipartisan panels of election supervisors.

The growing menace of hurricanes and wildfires is the price we pay because the world (of which the US is a major part, and needs to play a leading role) refuses to act on what climate scientists have been telling us since the 1970s.

Without widespread belief in experts, the truth becomes a matter of tribalism (one side believes in fighting Covid and the other doesn't), intimidation (Republicans who know better don't dare tell Trump's personality cult that he lost), or wishful thinking (nobody wants to believe we have to change our lives to cut carbon emissions).

Which one of us is Galileo? The foundational myth of modern science (Galileo saying "and yet it moves") expresses faith in a reality beyond the power of kings and popes. People who have trained their minds to be objective can see that reality, while others are stuck either following or rebelling against authority.

The question is: Who is Galileo in the current controversies? Is it the scientific experts who have spent their lives training to see clearly in these situations? Or is it the populists, who refuse to bow to the authority of the expert class, and insist on "doing their own research"?

Simply raising that question points to a more nuanced answer than just "Shut up and believe what you're told."

Take me, for example. This blog arises from distrust of experts. After the Saddam's-weapons-of-mass-destruction fiasco, I started looking deeper into the stories in the headlines. Because I was living in New Hampshire at the time, it was easy to go listen to the 2004 presidential candidates. Once I did, I noticed the media's habit of fitting a speech into a predetermined narrative, rather than reporting what a candidate was actually saying. Then I started reading major court decisions (like the Massachusetts same-sex marriage decision of 2003), and interpreting them for myself.

In short, I was doing my own research. Some guy at CNN may have spent his whole life reporting on legal issues, but I was going to read the cases for myself.

When social media became a thing, and turned into an even bigger source of misinformation than the mainstream media had ever been, I began to look on this blog as a model for individual behavior: Don't amplify claims without some amount of checking. (For example: In this week's summary -- the next post after this one -- I was ready to blast Trump for ignoring all observances of 9-11. But then I discovered that he appeared by video at a rally organized by one of his supporters on the National Mall. I'm not shy about criticizing Trump, but facts are facts.) Listen to criticism from commenters and thank them when they catch one of your mistakes. Change your opinions when the facts change.

But also notice the things that I don't do: When my wife got cancer, we didn't design her treatment program by ourselves. We made value judgments about what kinds of sacrifices we were willing to make for her treatment (a lot, as it turned out), but left the technical details to our doctors. At one point we felt that a doctor was a little too eager to get my wife into his favorite clinical trial, so we got a second opinion and ultimately changed doctors. But we didn't ditch Western medicine and count on Chinese herbs or something. (She's still doing fine 25 years after the original diagnosis.)

On this blog, I may not trust the New York Times and Washington Post to decide what stories are important and what they mean, but I do trust them on basic facts. If the NYT puts quotes around some words, I believe that the named person actually said those words (though I may check the context). If the WaPo publishes the text of a court decision, I believe that really is the text. And so on.

I also trust the career people in the government to report statistics accurately. The political appointees may spin those numbers in all sorts of ways, but the bureaucrats in the cubicles are doing their best.

In the 18 years I've been blogging, that level of trust has never burned me.

Where I come from. So the question isn't "Do you trust anybody?" You have to; the world is just too big to figure it all out for yourself. Instead, the question is who you trust, and what you trust them to do.

My background gives me certain advantages in answering those questions, because I have a foot in both camps. Originally, I was a mathematician. I got a Ph.D. from a big-name university and published a few articles in some prestigious research journals (though not for many years now). So I understand what it means to do actual research, and to know things that only a handful of other people know. At the same time, I am not a lawyer, a doctor, a political scientist, an economist, a climate scientist, or a professional journalist. So just about everything I discuss in this blog is something I view from the outside.

I don't, for example, have any inside knowledge about public health or infectious diseases or climate science. But I do know a lot about the kind of people who go into the sciences, and about the social mores of the scientific community. So when I hear about some vast conspiracy to inflate the threat of Covid or climate change, I can only shake my head. I can picture how many people would necessarily be involved in such a conspiracy, and who many of them would have to be. It's absurd.

In universities and labs all over the world, there are people who would love to be the one to expose the "hoax" of climate change, or to discover the simple solution that means none of us have to change our lifestyle. You couldn't shut them up by shifting research funding; you'd need physical concentration camps, and maybe gas chambers. The rumors of people vanishing into those camps would spread far enough that I would hear them.

I haven't.

Not all experts deserve our skepticism. Similarly, one of my best friends and two of my cousins are nurses. I know the mindset of people who go into medicine. So the idea that hospitals all over the country are faking deaths by the hundreds of thousands, or that ICUs are only pretending to be jammed with patients -- it's nuts.

If you've ever planned a surprise party, you know that conspiracies of just a dozen or so people can be hard to manage. Now imagine conspiracies that involve tens of thousands, most of whom were once motivated by ideals completely opposite to the goals of the conspiracy.

It doesn't happen.

I have a rule of thumb that has served me well over the years: You don't always have to follow the conventional wisdom, but when you don't you should know why.

Lots of expert classes have earned our distrust. But some haven't. They're not all the same. And even the bankers and the priests have motives more specific than pure evil. If they wouldn't benefit from some conspiracy, they're probably not involved.

Know thyself. As you divide up the world between things you're going to research yourself and things you're going to trust to someone else, the most important question you need to answer is: What kind of research can you reasonably do? (Being trained to read mathematical proofs made it easy for me to read judicial opinions. I wouldn't have guessed that, but it turned out that way.)

That's what's funny about the cartoon at the top: This guy thinks he can credibly compete with the entire scientific community (and expects his wife to share that assessment of his abilities).

My Dad (who I think suspected from early in my life that he was raising a know-it-all) often said to me: "Everybody in the world knows something you don't." As I got older, I realized that the reverse is also true: Just about all of us have some experience that gives us a unique window on the world. You don't necessarily need a Ph.D. to see something most other people miss.

But at the same time, often our unique windows point in the wrong direction entirely. My window, for example, tells me very little about what Afghans are thinking right now. If I want to know, I'm going to have to trust somebody a little closer to the topic.

And if I'm going to be a source of information rather than misinformation, I'll need to account for my biases. Tribalism, intimidation, and wishful thinking affect everybody. A factoid that matches my prior assumptions a little too closely is exactly the kind of thing I need to check before I pass it on. Puzzle pieces that fit together too easily may have been shaved a little; check them out.

So sure: Do your own research. But also learn your limitations, and train yourself to be a good researcher within those boundaries. Otherwise, you might be part of the problem rather than part of the solution.

weeklysift | September 13, 2021 at 10:39 am | Tags: culture wars | Categories: Articles | URL: https://wp.me/p1F9Ho-7Yb